KFF has conducted this annual survey of employer-sponsored health benefits since 1999. Since 2020, KFF has employed Davis Research LLC (Davis) to field the survey. From January to July 2025, Davis interviewed business owners as well as human resource and benefits managers at 1,862 firms.

SURVEY TOPICS

The survey includes questions on the cost of health insurance, offer rates, coverage, eligibility, plan type enrollment, premium contributions, employee cost sharing, prescription drug coverage, retiree health benefits, and wellness programs.

Firms that offer health benefits are asked about the attributes of their largest HMO, PPO, POS and HDHP/SO plans. Exclusive provider organizations (EPOs) are grouped with HMOs, and conventional (or indemnity) plans are grouped with PPOs.

Plan Definitions:

- HMO (Health Maintenance Organization): A plan that does not cover non-emergency services provided out of network.

- PPO (Preferred Provider Organization): A plan that allows use of both in-network and out-of-network providers, with lower cost sharing for in-network services and no requirement for a primary care referral.

- POS (Point-of-Service Plan): A plan with lower cost sharing for in-network services, but that requires a primary care gatekeeper for specialist or hospital visits.

- HDHP/SO (High-Deductible Health Plan with a Savings Option): A plan with a deductible of at least $1,000 for single coverage or $2,000 for family coverage, paired with either a health reimbursement arrangement (HRA) or a health savings account (HSA). While HRAs can be offered with non-HDHPs, the survey collects data only on HRAs paired with HDHPs. (See the introduction to Section 8 for more detail on HDHPs, HRAs, and HSAs.)

To reduce respondent burden, questions on cost sharing for office visits, hospitalization, outpatient surgery, and prescription drugs are limited to the firm’s largest plan. Firms offering multiple plan types report premium contributions and deductibles for their two largest plans. Within each plan type, respondents are asked about the plan with the highest enrollment.

Firms report attributes of their current plans as of the time of the interview. While the survey fielding begins in January, many firms have plan years that do not align with the calendar year. In some cases, firms may report data based on the prior year’s plan. As a result, some reported attributes—such as HSA deductible thresholds—may not align with current regulatory requirements. Additionally, plan decisions may have been made months prior to the interview.

SAMPLE DESIGN

The sample for the annual KFF Employer Health Benefits Survey includes private firms and nonfederal government employers with ten or more employees. The universe is defined by the U.S. Census’ 2021 Statistics of U.S. Businesses (SUSB) for private firms and the 2022 Census of Governments (COG) for non-federal public employers. At the time of sample design (December 2024), these data represented the most current information on the number of public and private firms. The sample size is determined based on the number of firms needed to achieve a target number of completes across five firm-size categories and whether the firm was located in California.

We attempted to re-interview prior survey respondents who participated in either the 2023 or 2024 survey, or both. In total, 186 firms participated in 2023, 423 firms participated in 2024, and 693 firms participated in both years.

Non-panel firms were randomly selected within size and industry groups.

Since 2010, the sample has been drawn from a Dynata list (based on a census compiled by Dun & Bradstreet) of the nation’s private employers, and from the COG for public employers. Starting in 2025, we included an augmented sample of 50 firms from the Forbes America’s Largest Private Companies list. This list includes U.S.-based firms with annual revenue of $2 billion or more and is intended to complement the Dynata sample frame.

To increase precision, the sample is stratified by ten industry categories and six size categories. Education is treated as a separate category for sampling but included in the “Service” category for weighting.
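The selection of non-panel firms within size and industry groups can be sketched as a stratified random draw. This is a minimal illustration in Python, not the survey's actual sampling code: the function name `stratified_sample`, the field names, the size label, and the per-stratum targets are all hypothetical.

```python
import random
from collections import defaultdict

def stratified_sample(frame, n_per_stratum, seed=0):
    """Draw a simple random sample within each (industry, size) stratum.

    frame: list of dicts with hypothetical keys "industry" and "size".
    n_per_stratum: dict mapping (industry, size) to a target sample count.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for firm in frame:
        strata[(firm["industry"], firm["size"])].append(firm)

    sample = []
    for key, firms in strata.items():
        # Never request more firms than the stratum contains.
        k = min(n_per_stratum.get(key, 0), len(firms))
        sample.extend(rng.sample(firms, k))
    return sample

# Hypothetical sample frame with two strata.
frame = [{"id": i, "industry": "Retail", "size": "10-24"} for i in range(10)]
frame += [{"id": 100 + i, "industry": "Finance", "size": "5,000+"} for i in range(5)]
targets = {("Retail", "10-24"): 3, ("Finance", "5,000+"): 2}
sample = stratified_sample(frame, targets)
```

Sampling independently within each cell guarantees that every industry and size combination is represented, which is the source of the precision gain described above.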

For more information on changes to sampling methods over time, please consult the extended methods document (https://kff.org/ehbs), which describes changes made in each year’s survey.

RESPONSE RATE

Response rates are calculated using a CASRO method, which accounts for firm eligibility in the study. The rate is computed by dividing the number of completes by the sum of refusals and the estimated number of eligible firms among those with unknown eligibility. The overall response rate is 13% [Figure M.1]. As in prior years, the response rate for panel firms is higher than for non-panel firms.

Similar to other employer and household surveys, response rates have declined over time. Since 2017, we have attempted to increase the number of completes by expanding the number of non-panel firms in the sample. While this strategy improves the precision of estimates—particularly for subgroups—it tends to reduce the overall response rate.

Most survey questions are asked only of firms that offer health benefits. A total of 1,610 of the 1,862 firms responding to the full survey indicated that they offer health benefits.

We asked one question of all firms we contacted by phone, even if they declined to complete the full survey: “Does your company offer a health insurance program as a benefit to any of your employees?” A total of 2,560 firms responded to this question, including 1,862 full survey respondents and 698 firms who responded to this question only.

These responses are included in the estimates of the percentage of firms offering health benefits presented in Chapter 2. The response rate for this question is 17.4% [Figure M.1].

Figure M.1: Response Rates for Various Subsets of the Sample, 2025

While response rates have decreased, elements of the survey design limit the potential impact of a response bias. Most major statistics are weighted by the number of covered workers at a firm. Collectively, 3,600,000 of the 67,600,000 workers covered by their own employer’s health benefits nationwide were employed at firms that completed the survey. The most important statistic weighted by the number of employers is the offer rate. Firms that do not complete the full survey are still asked whether they offer health benefits, ensuring a larger sample. As in previous years, most responding firms are very small. As a result, fluctuations in the offer rate for these small firms significantly influence the overall offer rate.

FIRM SIZES AND KEY DEFINITIONS

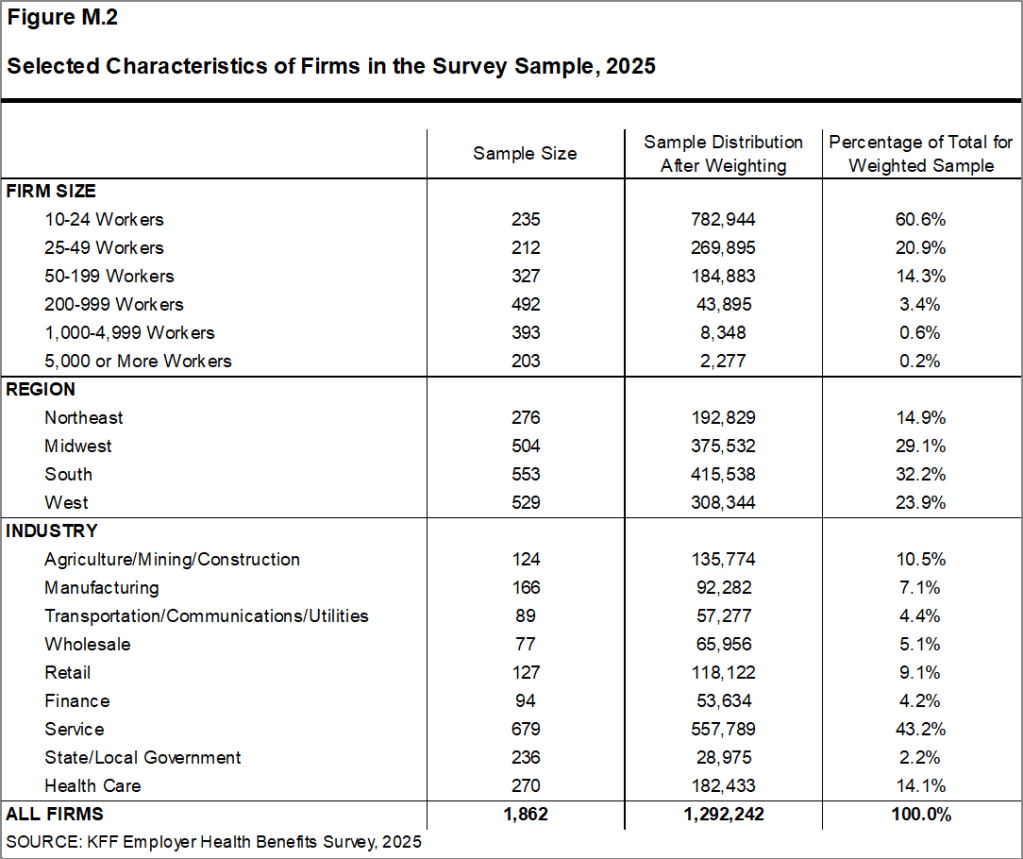

Throughout the report, we present data by firm size, region, and industry; [Figure M.2] displays selected characteristics of the sample. Unless otherwise noted, firm size is defined as follows: small firms have 10-199 workers, and large firms have 200 or more workers.

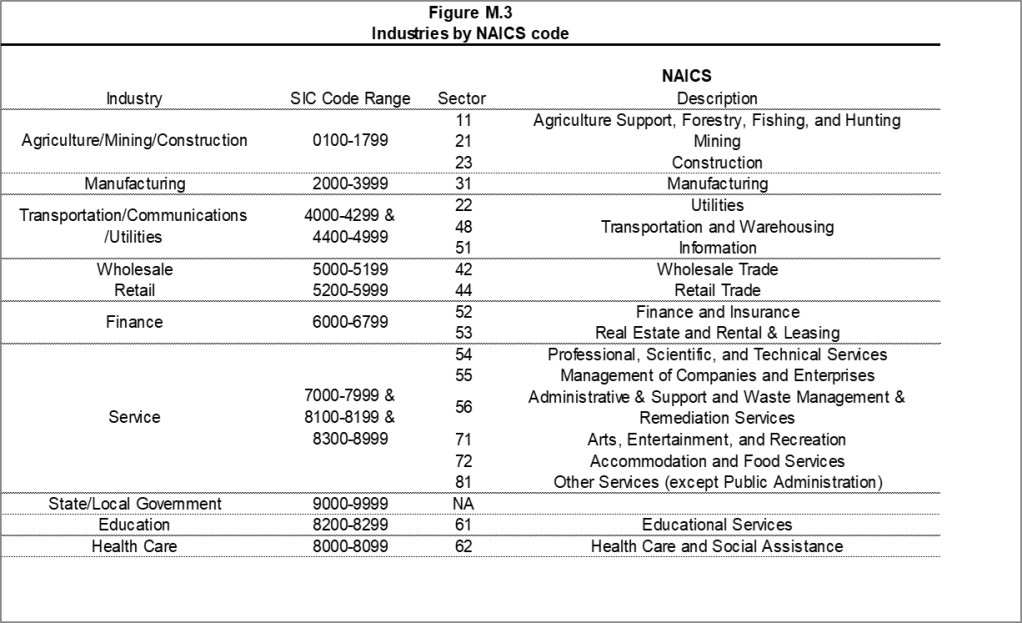

A firm’s primary industry classification is based on Dynata’s designation, which in turn is derived from the U.S. Census Bureau’s North American Industry Classification System (NAICS) [Figure M.3]. Firm ownership type, average wage level, and workforce age are based on respondents’ self-reported information.

While there is considerable overlap between firms categorized as “State/Local Government” in the industry classification and those identified as publicly owned, the two categories are not identical. For example, public school districts are included in the “Service” industry category, even though they are publicly owned.

Family coverage is defined as health insurance coverage for a family of four.

Figure M.2: Selected Characteristics of Firms in the Survey Sample, 2025

Figure M.3: Industries by NAICS code

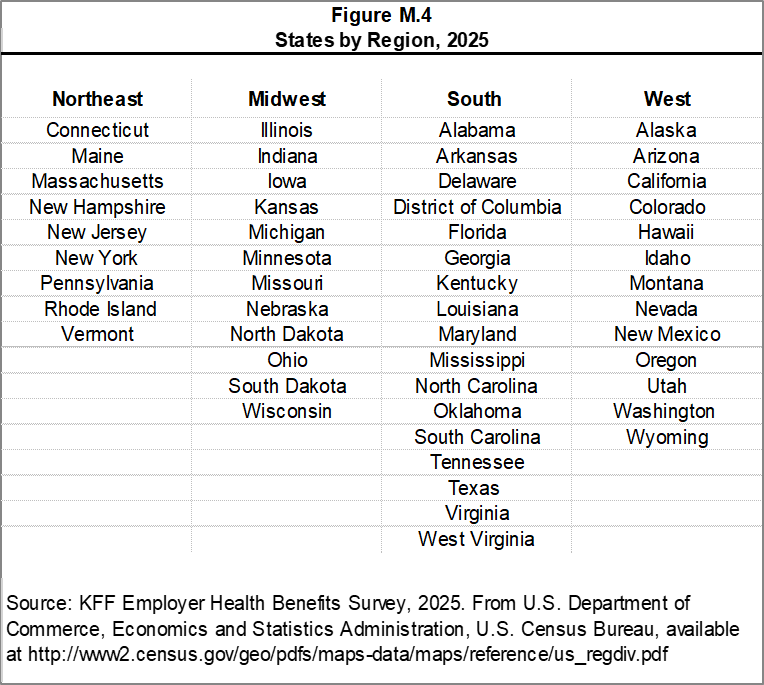

[Figure M.4] shows the categorization of states into regions, based on the U.S. Census Bureau’s regional definitions. State-level data are not reported due to limited sample sizes in many states and because the survey collects information only on a firm’s primary location—not where employees may be based. Some mid-size and large employers operate in multiple states, so the location of a firm’s headquarters may not correspond to the location of the health plan for which premium information was collected.

Figure M.4: States by Region, 2025

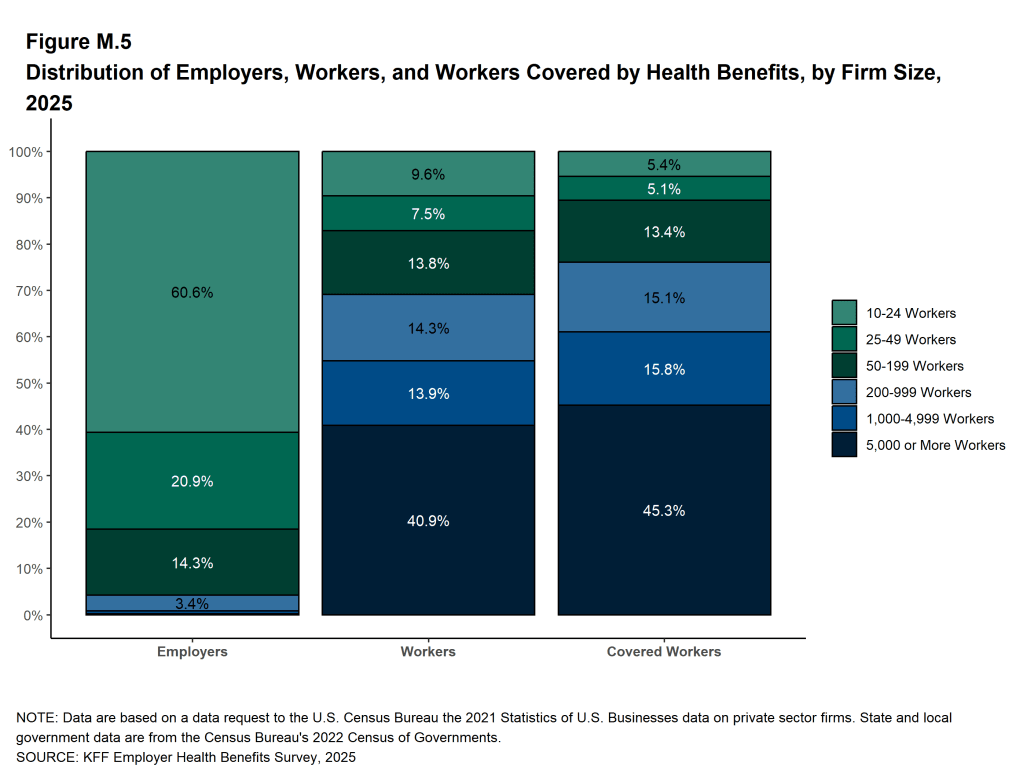

[Figure M.5] displays the distribution of the nation’s firms, workers, and covered workers (employees receiving health coverage from their employer). Beginning in 2025, firms with fewer than 10 employees were excluded from the survey universe. Although most firms in the United States are small, most workers covered by health benefits are employed at large firms: 76% of the covered worker weight is controlled by firms with 200 or more employees. Conversely, firms with 10-199 employees represent 96% of the employer weight.

Because small firms make up the vast majority of all firms, they heavily influence statistics weighted by the number of employers. For this reason, most firm-level statistics are reported by firm size. In contrast, large firms, especially those with 1,000 or more workers, have the greatest influence on statistics related to covered workers. Even within the large firm category (those with 200 or more workers), 81% of the employer weight is driven by firms with 200-999 employees.

Statistics for small firms and employer-weighted measures tend to exhibit greater variability.

Figure M.5: Distribution of Employers, Workers, and Workers Covered by Health Benefits, by Firm Size, 2025

The survey asks firms what percentage of their employees earn more or less than a specified amount in order to identify the portion of the workforce that has relatively lower or higher wages. This year, the income threshold is $37,000 or less per year for lower-wage workers and $80,000 or more for higher-wage workers. These thresholds are based on the 25th and 75th percentile of workers’ earnings as reported by the Bureau of Labor Statistics using data from the Occupational Employment Statistics (OES) (2023). The cutoffs were inflation-adjusted and rounded to the nearest thousand.

Annual inflation estimates are calculated as an average of the first three months of the year. The 12-month percentage change for this period was 2.7%. Data presented are nominal unless otherwise indicated.

ROUNDING AND IMPUTATION

Some figures may not sum to totals due to rounding. While overall totals and totals by firm size and industry are statistically valid, some breakdowns are not reported due to limited sample sizes or high relative standard errors. Where the unweighted sample size is fewer than 30 observations, figures are labeled “NSD” (Not Sufficient Data). Estimates with high relative standard errors are reviewed and, in some cases, suppressed. Many subset estimates may have large standard errors, meaning that even large differences between groups may not be statistically significant.
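The sample-size suppression rule can be expressed as a short check. The sketch below is illustrative only: the function name `format_estimate`, the subgroup names, and the example values are hypothetical, and it does not implement the separate review of estimates with high relative standard errors.

```python
def format_estimate(value, unweighted_n, min_n=30):
    """Return "NSD" when the unweighted sample has fewer than min_n observations."""
    if unweighted_n < min_n:
        return "NSD"
    return f"{value:.0f}%"

# Hypothetical cells: (label, estimate in percent, unweighted sample size).
cells = [("Subgroup A", 61.2, 412), ("Subgroup B", 47.5, 18)]
labels = {name: format_estimate(value, n) for name, value, n in cells}
```

A cell with exactly 30 observations is still reported, since the rule applies only to samples with fewer than 30.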

To improve readability, values below 3% are not shown in graphical figures. The underlying data for all estimates presented in graphs are available in Excel files accompanying each section at https://kff.org/ehbs.

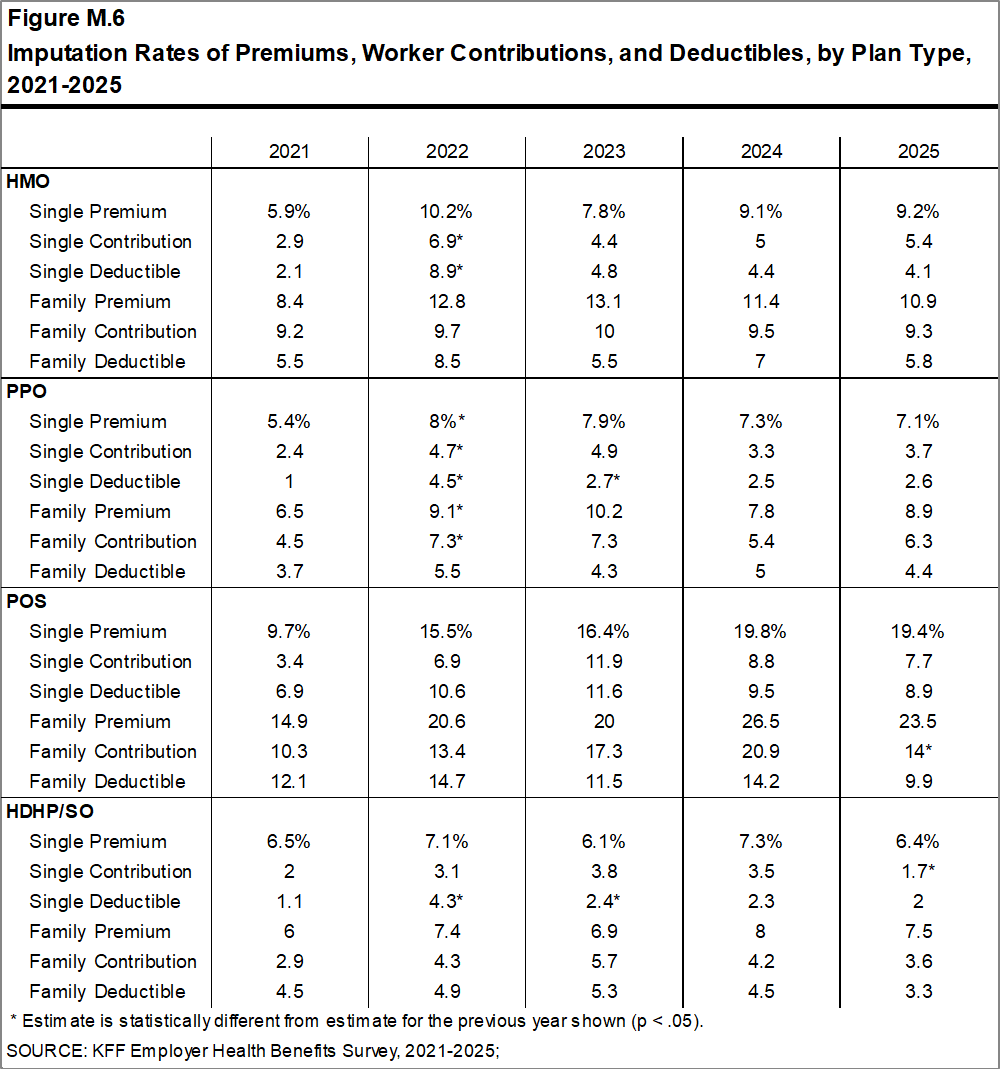

To control for item nonresponse bias, we impute missing values for most variables. On average, 10% of observations are imputed. All variables—except single coverage premiums—are imputed using a hotdeck method, which replaces missing values with observed values from a similar firm (based on size and industry).
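A hotdeck step of this kind can be sketched as follows. This is a simplified illustration under stated assumptions, not the survey's implementation: the function name `hotdeck_impute`, the field names, and the choice of a uniformly random donor within the size and industry cell are all hypothetical.

```python
import random

def hotdeck_impute(firms, variable, seed=0):
    """Fill missing values of `variable` with a donor value from the same cell.

    A cell is a (size, industry) pair. Firms in cells with no observed
    donor are left missing in this sketch.
    """
    rng = random.Random(seed)

    # Pool the observed values available as donors in each cell.
    donors = {}
    for firm in firms:
        if firm[variable] is not None:
            cell = (firm["size"], firm["industry"])
            donors.setdefault(cell, []).append(firm[variable])

    imputed = []
    for firm in firms:
        filled = dict(firm)  # copy so the input records are not modified
        if filled[variable] is None:
            pool = donors.get((firm["size"], firm["industry"]))
            if pool:
                filled[variable] = rng.choice(pool)
                filled["imputed"] = True
        imputed.append(filled)
    return imputed

# Hypothetical records; None marks item nonresponse.
firms = [
    {"size": "small", "industry": "Retail", "deductible": 1500},
    {"size": "small", "industry": "Retail", "deductible": 2000},
    {"size": "small", "industry": "Retail", "deductible": None},
    {"size": "large", "industry": "Finance", "deductible": None},
]
result = hotdeck_impute(firms, "deductible")
```

Because donors are drawn from observed values, imputed values always fall within the range actually reported by similar firms.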

When both single and family coverage premiums are missing for a firm, the single coverage premium is first predicted using a random forest algorithm based on other known plan and firm characteristics. This predicted value is then used to impute related variables, such as family premiums and worker contributions, using the hotdeck approach. Some variables are hotdecked based on their relationship to another variable. For example, if a firm reports a family worker contribution but not a family premium, we impute a ratio between the two and then calculate the missing premium.
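The ratio-based example in the paragraph above works out as simple arithmetic. The sketch below assumes the imputed ratio is the donor firm's worker contribution divided by its premium; the function name and all dollar amounts are hypothetical.

```python
import math

def impute_premium_from_contribution(contribution, donor_contribution, donor_premium):
    """Impute a missing premium from a reported worker contribution.

    The donor's contribution-to-premium ratio is borrowed, and the
    missing premium is the reported contribution divided by that ratio.
    """
    ratio = donor_contribution / donor_premium
    return contribution / ratio

# Hypothetical firm: reports a $6,000 family worker contribution but no premium.
# Hypothetical donor: $5,000 contribution on a $25,000 premium (ratio 0.2).
premium = impute_premium_from_contribution(6000, 5000, 25000)
```

Imputing the ratio rather than the premium itself keeps the imputed premium consistent with the contribution the firm actually reported.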

In 2025, there were forty-six variables where the imputation rate exceeded 20%, most of which were related to plan-level statistics. When constructing aggregate estimates across all plans, the imputation rate is typically much lower. A few variables are not imputed—these are usually cases where a “don’t know” response is considered valid.

To ensure data quality, we conduct multiple reviews of outliers and illogical responses. Each year, several hundred firms are recontacted to verify or correct their responses. In some cases, responses are edited based on open-ended comments or established logic rules.

Figure M.6: Imputation Rates of Premiums, Worker Contributions, and Deductibles, by Plan Type, 2021-2025

WEIGHTING

Because we select firms randomly, it is possible through the use of weights to extrapolate results to national (as well as firm size, regional, and industry) averages. These weights allow us to present findings based on the number of workers covered by health plans, the number of workers, and the number of firms. In general, findings in dollar amounts (such as premiums, worker contributions, and cost sharing) are weighted by covered workers. Other estimates, such as the offer rate, are weighted by firms.

The employer weight was determined by calculating the firm’s probability of selection. This weight was trimmed of overly influential weights and calibrated to U.S. Census Bureau’s 2021 Statistics of U.S. Businesses for firms in the private sector, and the 2022 Census of Governments totals. The worker weight was calculated by multiplying the employer weight by the number of workers at the firm and then following the same weight adjustment process described above. The covered-worker weight and the plan-specific weights were calculated by multiplying the percentage of workers enrolled in each of the plan types by the firm’s worker weight. These weights allow analyses of workers covered by health benefits and of workers in a particular type of health plan.
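The relationship between the weights can be illustrated with a small calculation. This sketch covers only the multiplication steps and omits trimming and calibration. It assumes, as a simplification, that plan shares are expressed as fractions of all workers at the firm and that the covered-worker weight is the sum of the plan weights; the function name and all numbers are hypothetical.

```python
def build_weights(employer_weight, workers, plan_shares):
    """Derive worker, plan, and covered-worker weights from an employer weight.

    plan_shares: dict mapping plan type to the fraction of the firm's
    workers enrolled in that plan type (an assumption of this sketch).
    """
    worker_weight = employer_weight * workers
    plan_weights = {plan: worker_weight * share for plan, share in plan_shares.items()}
    covered_weight = sum(plan_weights.values())
    return worker_weight, plan_weights, covered_weight

# Hypothetical firm: employer weight 50, 120 workers,
# half enrolled in a PPO and a quarter in an HDHP/SO.
worker_wt, plan_wts, covered_wt = build_weights(50, 120, {"PPO": 0.5, "HDHP/SO": 0.25})
```

In this example the firm stands in for 50 firms and 6,000 workers, of whom 4,500 are covered.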

The trimming procedure follows these steps. First, we grouped firms into size and offer categories. Within each stratum, we calculated the trimming cut point as the median plus six times the interquartile range (M + [6 * IQR]). Weight values larger than this cut point are trimmed. In all instances, very few weight values were trimmed.
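The cut point rule can be sketched in a few lines. This illustration makes two assumptions not stated in the text: that trimmed weights are set equal to the cut point, and that quartiles are computed with linear interpolation (Python's "inclusive" method). The function name `trim_weights` and the example weights are hypothetical.

```python
import statistics

def trim_weights(weights_by_stratum, multiplier=6):
    """Cap each stratum's weights at median + multiplier * IQR."""
    trimmed = {}
    cut_points = {}
    for stratum, weights in weights_by_stratum.items():
        q1, _, q3 = statistics.quantiles(weights, n=4, method="inclusive")
        cut = statistics.median(weights) + multiplier * (q3 - q1)
        cut_points[stratum] = cut
        # Assumption: weights above the cut point are reduced to the cut point.
        trimmed[stratum] = [min(w, cut) for w in weights]
    return trimmed, cut_points

# Hypothetical stratum with one overly influential weight.
weights = {"small, offering": [10, 12, 14, 16, 18, 500]}
trimmed, cut_points = trim_weights(weights)
```

In this example the median is 15 and the interquartile range is 5, so the cut point is 45 and only the weight of 500 is changed.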

To account for design effects, the statistical computing package R version 4.5.1 (2025-06-13 ucrt) and the library “survey” version 4.4.8 were used to calculate standard errors.

STATISTICAL SIGNIFICANCE AND LIMITATIONS

All statistical tests are performed at the 0.05 significance level. For figures spanning multiple years, comparisons are made between each year and the previous year shown, unless otherwise noted. No statistical tests are conducted for years prior to 1999.

Subgroup comparisons are made against all other firms not included in the specified group. For example, firms in the Northeast are compared to an aggregate of firms in the Midwest, South, and West. For plan type comparisons (e.g., average premiums in PPOs), results are tested against the “All Plans” estimate. In some cases, plan-specific estimates are also compared to similar estimates for other plan types (e.g., single and family premiums in HDHP/SOs vs. HMO, PPO, and POS plans); such comparisons are noted in the text.

Two statistical tests are used: the t-test and the Wald test. A small number of observations for certain variables can result in large variability around point estimates. Readers should be cautious of these when interpreting year-to-year changes, as large shifts may not be statistically significant. Standard errors for selected estimates are available in a technical supplement at http://ehbs.kff.org.

Due to the complexity of many employer health benefit programs, the survey may not capture all elements of any given plan. For instance, employers may offer intricate and varying prescription drug benefits, premium contributions, or wellness incentives. Interviews were conducted with the individual most knowledgeable about the firm’s health benefits, though some respondents may not have complete information on all aspects of the plan. While the survey collects data on the number of workers enrolled in coverage, it does not capture the characteristics of those offered or enrolled in specific plans.

DATA COLLECTION AND SURVEY MODE

Starting in 2022, we expanded the use of computer-assisted web interview (CAWI), offering most respondents the opportunity to complete the survey using an online questionnaire rather than a telephone interview. In 2025, fifty-seven percent of survey responses were completed via telephone interview, and the remainder were completed online. Previous analysis has found that survey mode had little impact on major statistics such as annual premiums, contributions, and deductibles.

Preferred Reporting Items for Complex Sample Survey Analysis (PRICSSA)

In their Journal of Survey Statistics and Methodology article, Seidenberg, Moser, and West (2023) propose a checklist for survey administrators and sponsoring organizations to help external researchers quickly understand the methods used to create a complex sample dataset. The Preferred Reporting Items for Complex Sample Survey Analysis (PRICSSA) recommends a standard format to enumerate data collection and analysis techniques across a variety of different surveys. KFF has adopted this checklist to increase transparency for our readership and also to promote reproducibility among external researchers granted access to our public use files.

- 1.1 Data collection dates: January 27, 2025-July 23, 2025.

- 1.2 Data collection mode(s): fifty-seven percent computer-assisted telephone interviewing (CATI), and the remainder completed with computer assisted web interview (CAWI).

- 1.3 Target population: Private firms as well as state and local government employers with ten or more employees in 50 US states and Washington DC.

- 1.4 Sample design: A sample stratified by ten industry categories and six size categories drawn from a Dynata list (based on a census assembled by Dun and Bradstreet) of the nation’s private employers and the Census of Governments for public employers.

- 1.5 Full Survey response rate: 13 percent (CASRO method).

- 2.1 Missingness rates: On average, 10% of observations are imputed.

- 2.2 Observation deletion: Observations found to be duplicated firms, out of business, or no longer existing in the sample universe were deleted.

- 2.3 Sample sizes: 1,862 firms completed the entire survey, 2,560 completed at least the offer question, out of 30,150 initially sampled firms, generalizing to a total of about one million firms.

- 2.4 Confidence intervals / standard errors: All statistical tests are performed at the .05 significance level.

- 2.5 Weighting: `empwt` (firms), `empwt_a6` (firms, including those answering only the offer question), `wkrwt` (workers), `covwt` (policyholders), `hmowt`, `ppowt`, `poswt`, and `hdpwt` (plan weights).

- 2.6 Variance estimation: Taylor Series Linearization with `newcell` used as the stratum variable but no PSU variable.

- 2.7 Subpopulation analysis: The R `survey` package toolkit such as `svyby` and a complex sample design’s `subset` method allowed for most analysis of subdomains.

- 2.8 Suppression rules: Where the unweighted sample size is fewer than 30 observations, figures include the notation “NSD” (Not Sufficient Data). Estimates with high relative standard errors are reviewed and in some cases not published.

- 2.9 Software and code: All design-based analyses were performed using R version 4.5.1 (2025-06-13 ucrt) and `survey` library version 4.4.8.
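Item 2.6 describes Taylor series linearization with a stratum variable and no PSU variable. For readers who want to see the mechanics, the following is a simplified Python sketch of a linearized standard error for a weighted mean under a stratified design in which each firm is its own sampling unit. It is not the R `survey` code used for the report: it ignores finite population corrections, and its handling of single-observation strata (skipping them) is an assumption of this sketch. The function name and example values are hypothetical.

```python
import math
from collections import defaultdict

def weighted_mean_se(values, weights, strata):
    """Weighted mean and its Taylor-linearized standard error.

    Each observation is treated as its own sampling unit within its
    stratum, with no finite population correction.
    """
    total_w = sum(weights)
    mean = sum(w * y for w, y in zip(weights, values)) / total_w

    # Linearized contribution of each observation to the ratio estimator.
    by_stratum = defaultdict(list)
    for y, w, h in zip(values, weights, strata):
        by_stratum[h].append(w * (y - mean) / total_w)

    variance = 0.0
    for z in by_stratum.values():
        n_h = len(z)
        if n_h < 2:
            continue  # assumption: single-observation strata add no variance
        z_bar = sum(z) / n_h
        variance += n_h / (n_h - 1) * sum((zi - z_bar) ** 2 for zi in z)
    return mean, math.sqrt(variance)

# Hypothetical data: one stratum with equal weights.
mean, se = weighted_mean_se([1, 2, 3, 4], [1, 1, 1, 1], ["a", "a", "a", "a"])
```

With a single stratum and equal weights, the result reduces to the familiar standard error of a simple random sample mean, which provides a quick sanity check on the formula.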

2025 SURVEY

The 2025 survey includes new questions on primary care, menopause benefits, direct contracting, specialty networks, and transparency, among other topics. As in previous years, modifications were also made to existing questions to improve clarity and reflect changes in the health care marketplace.

California Oversample

In 2025, we fielded an oversample of California-based employers to generate separate state-level estimates for the CHCF/KFF California Employer Health Benefits Survey (CHBS). KFF and the California Health Care Foundation (CHCF) have previously included California-specific questions and an oversample of firms located in the state. The 2025 California Employer Health Benefits Survey will produce estimates comparable to those in the 2022 CHBS. Firms with workers in California are included in both the 2025 EHBS and CHBS. To ensure statistical reliability at both the national and state levels, firm weights for the California sample were calibrated to state-specific targets from the U.S. Census Bureau’s Statistics of U.S. Businesses (SUSB). All firms were asked about the characteristics of their workforce nationwide and, if applicable, in California.

Augmented Sample

Firms with 70,000 or more employees account for 14% of workers in the United States. As a result, the accuracy of estimates depends heavily on the participation of these large employers. In recent years, however, participation among the largest firms has declined. In 2014, survey respondents included firms of this size employing about 9% of the nation’s covered workforce; by 2024, this share had fallen to 4%. While the total number of responding firms has remained relatively stable, the survey now includes fewer firms that have large workforces. Although there are likely multiple reasons for the decline in participation among large firms, one potential concern is that these firms may be underrepresented in the sample frame.

To address this issue, beginning in 2025, we implemented an augmented sample drawn from the Forbes America’s Largest Private Companies list, which includes U.S.-based firms with annual revenues of $2 billion or more. This supplemental sample was designed to enhance representation of the largest employers and complement the primary Dynata sample frame. For this augmented sample, Davis Research conducted outreach to multiple individuals at each firm, targeting staff with human resources-related titles.

Exclusion of Firms with Fewer than 10 Employees

Beginning in 2025, the survey no longer includes firms with 3 to 9 employees. This change reflects longstanding challenges in surveying the smallest firms and their limited influence on national estimates. Although there are 1.95 million such firms in the U.S., they employ a very small share of the workforce and present significant methodological difficulties.

In 2024, only 151 firms in this size range responded to the survey, and just 29 reported offering health benefits. Due to their small numbers, each responding firm carried substantial weight in employer-level estimates: on average, offering firms with 3-9 employees were weighted 58 times more heavily than larger firms. As a result, a small number of responses had disproportionate influence on employer-weighted estimates, even though these firms often had more limited knowledge of their health plans. The response rate for offering firms in this group was also significantly lower than the overall rate (6.5% vs. 14%).

At the same time, these firms have minimal impact on most covered worker-weighted estimates, such as premiums, contributions, deductibles and other cost-sharing. For example, the average family premium when including versus excluding 3-9 employee firms in 2024 differed by only $13 because they account for just 3.7% of all covered workers. For more information on the sample distribution and response rates including firms with 3 to 9 employees, see the 2024 methods section.

Given these factors — low response rates, high variability, and limited influence on key national estimates — firms with fewer than 10 employees were removed from the sample universe starting in 2025.

This change most directly affects the firm offer rate. In 2024, the offer rate among firms with 10 or more employees was 65% compared to 54% among firms with 3 or more employees. While this adjustment reduces insight into the smallest firms, it improves the precision and reliability of estimates for the remaining sample universe.

Decline in Single-Question A6 Firm Counts

After fielding the 2025 survey, we discovered a skip pattern error that sharply reduced the number of firms that declined the full survey but answered the question “Does your company offer a health insurance program as a benefit to any of your employees?” In the past few years, more than 2,000 firms have answered only this question and not the full survey; the error reduced this segment’s 2025 unweighted sample to only about 700 firms. Although this oversight decreased the precision of our 2025 offer rate estimates, we reviewed the questionnaire pathways and do not believe it introduced bias into data collection. Including or excluding these additional firms yielded the same percentage point estimates in both years: 65% in 2024 and 61% in 2025. This oversight also reduced our 2025 combined response rate to 17%, compared to 30% last year, since fewer eligible firms were given an opportunity to answer this standalone question. (The 2024 Table M.1 shows 31% including firms with 3-9 employees.) We expect to remedy this issue in the 2026 setup and hope to collect single-question information from a larger pool of firms, consistent with recent years.

OTHER RESOURCES

Additional information about the Employer Health Benefits Survey is available at https://kff.org/ehbs, including a Health Affairs article, an interactive graphic, and historical reports. Researchers may also request access to a public use dataset at https://www.kff.org/contact-us/.

The Survey Design and Methods section on our website includes an extended methodology document that is not available in the PDF or printed versions of this report. Readers interested in more detailed information on survey methods should consult the online edition.

Published: October 22, 2025. Last Updated: October 16, 2025.

HISTORICAL DATA

Data in this report focus primarily on findings from surveys conducted and authored by KFF since 1999. Between 1999 and 2017, the Health Research & Educational Trust (HRET) co-authored this survey. HRET’s divestiture had no impact on our survey methods, which remain the same as years past. Prior to 1999, the survey was conducted by the Health Insurance Association of America (HIAA) and KPMG using a similar survey instrument, but data are not available for all the intervening years. Following the survey’s introduction in 1987, the HIAA conducted the survey through 1990, but some data are not available for analysis. KPMG conducted the survey from 1991-1998. However, in 1991, 1992, 1994, and 1997, only larger firms were sampled. In 1993, 1995, 1996, and 1998, KPMG interviewed both large and small firms. In 1998, KPMG divested itself of its Compensation and Benefits Practice, and part of that divestiture included donating the annual survey of health benefits to HRET.

This report uses historical data from the 1993, 1996, and 1998 KPMG Surveys of Employer-Sponsored Health Benefits and the 1999-2017 Kaiser/HRET Survey of Employer-Sponsored Health Benefits. For a longer-term perspective, we also use the 1988 survey of the nation’s employers conducted by the HIAA, on which the KPMG and KFF surveys are based. The survey designs for the three surveys are similar.

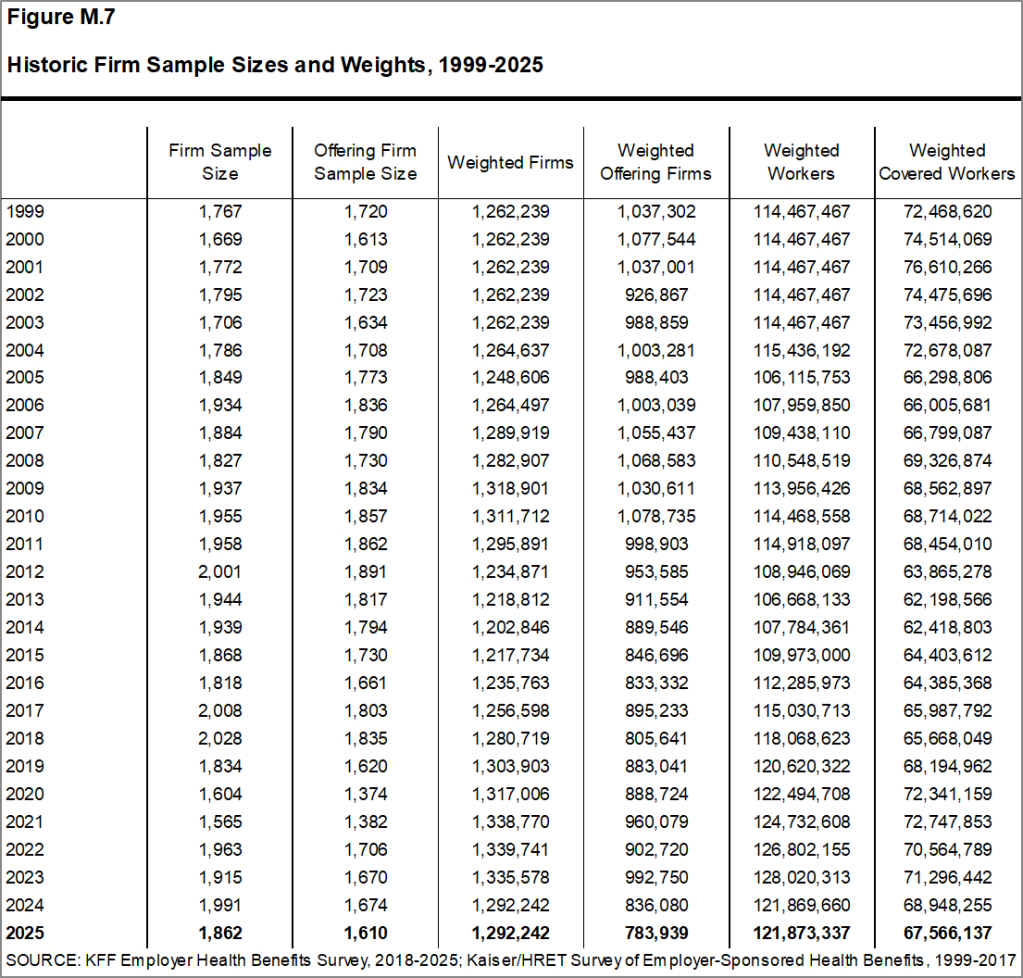

[Figure M.7] displays the historic sample sizes and weights of firms, workers, and covered workers (employees receiving coverage from their employer).

Figure M.7: Historic Firm Sample Sizes and Weights, 1999-2025

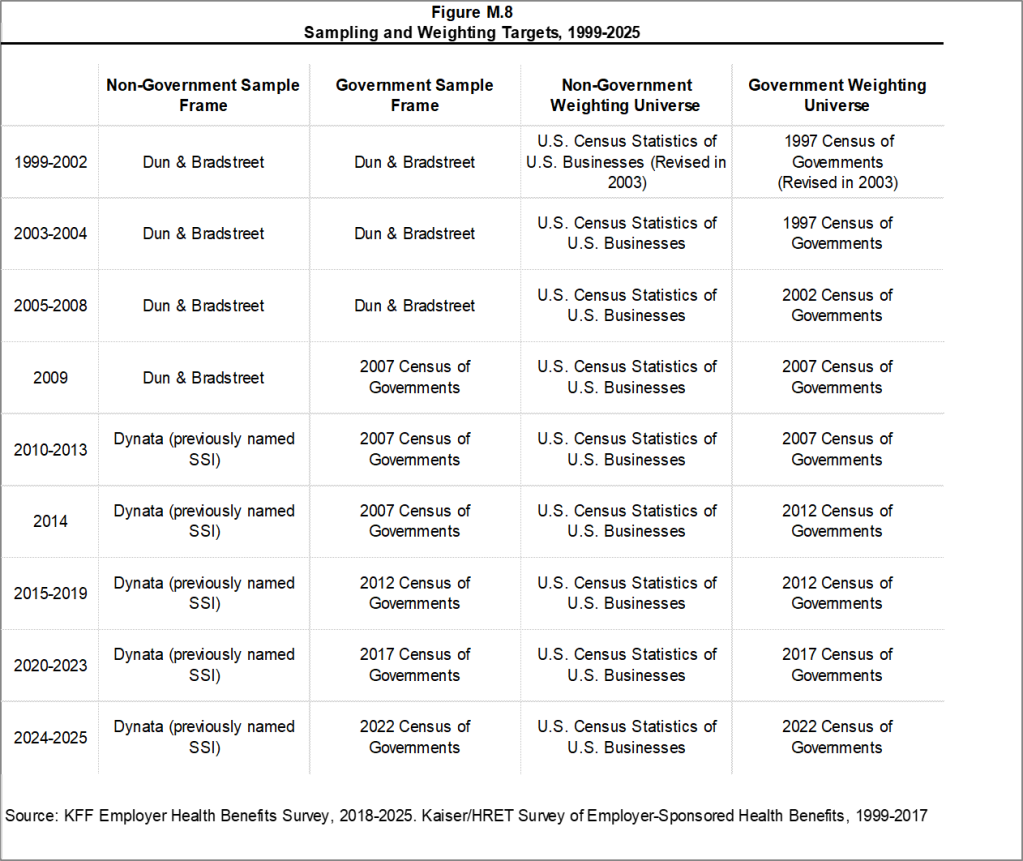

[Figure M.8] displays the historic sample frames and weighting universes.

Figure M.8: Sampling and Weighting Targets, 1999-2025

1999

The Kaiser Family Foundation and The Health Research and Educational Trust (Kaiser/HRET) began sponsoring the survey of employer-sponsored health benefits supported for many years by KPMG Peat Marwick LLP, an international consulting and accounting firm. In 1998, KPMG divested itself of its Compensation and Benefits Practice, and donated the annual survey of health benefits to HRET, a non-profit research organization affiliated with the American Hospital Association. From 1999 until 2017, the survey was conducted under a partnership between HRET and The Kaiser Family Foundation, a health care philanthropy and policy research organization that is not affiliated with Kaiser Permanente or Kaiser Industries.

Starting in 1999, the survey continued a core set of questions from prior KPMG surveys, but was expanded to include small employers and a variety of policy-oriented questions. Some reports include data from the 1993, 1996 and 1998 KPMG Surveys of Employer-Sponsored Health Benefits. For a longer-term perspective, we also use the 1988 survey of the nation’s employers conducted by the Health Insurance Association of America (HIAA), on which the KPMG, Kaiser/HRET, and Kaiser Family Foundation surveys were based. Many of the questions in the HIAA, KPMG, Kaiser/HRET, and Kaiser Family Foundation surveys are identical, as is the sample design. Since Point-of-Service (POS) plans did not exist in 1988, reports do not include statistics for this plan type in that year.

Starting in 1999, Kaiser/HRET drew its sample from a Dun & Bradstreet list of the nation’s private and public employers with three or more workers. To increase precision, Kaiser/HRET stratified the sample by industry and the number of workers in the firm. Kaiser/HRET attempted to repeat interviews with many of the 2,759 firms interviewed in 1998 and replaced non-responding firms with another firm from the same industry and firm size. As a result, 1,377 firms in the 1999 total sample of 1,939 firms participated in both the 1998 and 1999 surveys.

For more detail about the 1999 survey, see the Survey Methodology section of that year’s report.

2000

Kaiser/HRET attempted to repeat interviews with many of the 1,939 firms interviewed in 1999 and replaced non-responding firms with other firms of the same industry and firm size. As a result, 982 firms in the 2000 survey’s total sample of 1,887 firms participated in both the 1999 and 2000 surveys. The overall response rate was 45%, down from 60% in 1999. Contributing to the declining response rate was the decision not to re-interview any firms with 3-9 workers who participated in the 1999 survey. In 1999, the survey weights had instead been adjusted to control for the fact that firms with 3-9 workers that are in the panel (responded in either 1998 or 1999) are biased in favor of offering a health plan. The response rate in 2000 for firms with 3-9 workers was 30%.

For more detail about the 2000 survey, see the Survey Methodology section of that year’s report.

2001

For more detail about the 2001 survey, see the Survey Methodology section of that year’s report.

2002

The list of imputed variables was greatly expanded in 2002 to also include self-insurance status, level of benefits, prescription drug cost-sharing, copay and coinsurance amounts for prescription drugs, and firm workforce characteristics such as average income, age and part-time status. On average, 2% of these observations are imputed for any given variable. The imputed values are determined based on the distribution of the reported values within strata defined by firm size and region.

For more detail about the 2002 survey, see the Survey Methodology section of that year’s report.

2003

The calculation of the weights followed a similar approach to previous years, but with several notable changes in 2003. First, as in years past, the basic weight was determined, followed by a nonresponse adjustment added this year to reflect the fact that small firms that do not participate in the full survey are less likely to offer health benefits and, consequently, are unlikely to answer the single offer rate question. To make this adjustment, Kaiser/HRET conducted a follow-up survey of all firms with 3-49 workers that did not participate in the full survey. Each of these 1,744 firms was asked the single question, “Does your company offer or contribute to a health insurance program as a benefit to its employees?” The main difference between this follow-up survey and the original survey is that in the follow-up survey the first person who answered the telephone was asked whether the firm offered health benefits, whereas in the original survey the question was asked of the person who was identified as most knowledgeable about the firm’s health benefits. Conducting the follow-up survey accomplished two objectives. First, statistical techniques (a McNemar analysis which was confirmed by a chi-squared test) demonstrated that the change in method-speaking with the person answering the phone rather than a benefits manager-did not bias the results of the follow-up survey. Analyzing firms who responded to the offer question twice, in both the original and follow-up survey, proved that there was no difference in the likelihood that a firm offers coverage based on which employee answered the question about whether a firm offers health benefits. Second, the follow-up survey demonstrated that very small firms not offering health benefits to their workers are less likely to answer the one survey question about coverage. 
Kaiser/HRET analyzed the group of firms that only responded to the follow-up survey and performed a t-test between the firms that had responded to both the initial survey and the follow-up, and those that only responded to the follow-up. Tests confirmed the hypothesis that the firms that did not answer the single offer rate question in the original survey were less likely to offer health benefits. To adjust the offer rate data for this finding, an additional nonresponse adjustment was applied to increase the weight of firms in the sample that do not offer coverage. The second change to the weighting method in 2003 was to trim the weights in order to reduce the influence of weight outliers. On occasion one or two firms will, through the weighting process, represent a highly disproportionate number of firms or covered workers. Rather than excluding these observations from the sample, a set cut point that would minimize the variances of several key variables (such as premium change and offer rate) was determined. The additional weight represented by outliers is then spread among the other firms in the same sampling cell. Finally, a post-stratification adjustment was applied. In the past, Kaiser/HRET poststratified back to the Dun & Bradstreet frequency counts. Concern over volatility of counts in recent years led to the use of an alternate source for information on firm and industry data. This year the survey uses the recently released Statistics of U.S. Businesses conducted by the U.S. Census as the basis for the post-stratification adjustment. These Census data indicate the percentage of the nation’s firms with 3-9 workers is 59% rather than the higher percentages (e.g., 76% in 2002) derived from Dun & Bradstreet’s national database. This change has little impact on worker-based estimates, since firms with 3-9 workers accounted for less than 10% of the nation’s workforce.
The impact on estimates expressed as a percentage of employers (e.g., the percent of firms offering coverage), however, may be significant. Due to these changes, Kaiser/HRET recalculated the weights for survey years 1999-2002 and modified estimates published in the survey where appropriate. The vast majority of these estimates are not statistically different. However, please note that the survey data published starting in 2003 varies slightly from previously published reports.
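
The weight-trimming step described above can be sketched in a few lines of Python. This is a minimal illustration rather than the procedure Kaiser/HRET ran: the function name `trim_weights`, the example weights, and the cut point are hypothetical, and spreading the excess in proportion to each remaining firm's weight is an assumption, since the text states only that the excess is spread among the other firms in the same sampling cell.

```python
def trim_weights(weights, cut_point):
    """Cap weights at cut_point and spread the excess over the other firms in the cell.

    Assumptions (not from the report): the excess is redistributed in
    proportion to the untrimmed firms' weights, and only one pass is made.
    """
    excess = sum(w - cut_point for w in weights if w > cut_point)
    kept_total = sum(w for w in weights if w <= cut_point)
    if excess == 0 or kept_total == 0:
        # Nothing to trim, or no other firm in the cell to receive the excess.
        return list(weights)
    factor = 1 + excess / kept_total
    return [cut_point if w > cut_point else w * factor for w in weights]


# Hypothetical weights for one sampling cell; the last firm is an outlier.
cell = [10.0, 20.0, 30.0, 140.0]
trimmed = trim_weights(cell, cut_point=80.0)
```

In this example the outlier is capped at 80 and the other three weights double, so the cell total (200) is the same before and after trimming.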

For more detail about the 2003 survey, see the Survey Methodology section of that year’s report.

2004

For more detail about the 2004 survey, see the Survey Methodology section of that year’s report.

2005

In 2005, the Kaiser/HRET survey added two sections to the questionnaire to collect information about high-deductible health plans (HDHP) that are offered along with a health reimbursement arrangement (HRA) or are health savings account (HSA) qualified. Questions in these sections were asked of all firms offering these plan types, regardless of enrollment. Specific weights were also created to analyze the HDHP plans that are offered along with HRAs or are HSA qualified. These weights represent the proportion of employees enrolled in each of these arrangements.

We updated our data to reflect the 2002 Census of Governments. We also removed federal government employee counts from our post-stratification.

For more detail about the 2005 survey, see the Survey Methodology section of that year’s report.

2006

For the first time in 2006, Kaiser/HRET asked questions about the highest enrollment HDHP/SO as a separate plan type, equal to the other plan types. In prior years, data on HDHP/SO plans were collected as part of one of the other types of plans. Therefore, the removal of HDHP/SOs from the other plan types may affect the year to year comparisons for the other plan types. Given the decline in conventional health plan enrollment and the addition of HDHP/SO as a plan type option, Kaiser/HRET eliminated nearly all questions pertaining to conventional coverage from the survey instrument. We continue to ask firms whether or not they offer a conventional health plan and, if so, how much their premium for conventional coverage increased in the last year, but respondents are not asked additional questions about the attributes of the conventional plans they offer. Because we have limited information about conventional health plans, we must make adjustments in calculating all plan averages or distributions. In cases where a firm offers only conventional health plans, no information from that respondent is included in all plan averages. The exception is for the rate of premium growth, for which we have information. If a firm offers a conventional health plan and at least one other plan type, for categorical variables we assign the values from the health plan with the largest enrollment (other than the conventional plan) to the workers in the conventional plan. In the case of continuous variables, covered workers in conventional plans are assigned the weighted average value of the other plan types in the firm.
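
The assignment rule for conventional plans can be illustrated with a short Python sketch. The field names (`type`, `enrollment`, `deductible`, `has_copay`) and the example firm are hypothetical, and the sketch assumes that the weighted average for continuous variables is weighted by plan enrollment, which the text implies but does not state.

```python
def values_for_conventional(plans, continuous_key, categorical_key):
    """Return the values assigned to workers in a firm's conventional plan.

    Continuous variable: enrollment-weighted average of the other plan types.
    Categorical variable: value from the largest non-conventional plan.
    Returns None when the firm offers only conventional plans.
    Assumes the other plans have positive total enrollment.
    """
    others = [p for p in plans if p["type"] != "conventional"]
    if not others:
        return None  # such firms are left out of the all-plan averages
    total = sum(p["enrollment"] for p in others)
    average = sum(p[continuous_key] * p["enrollment"] for p in others) / total
    largest = max(others, key=lambda p: p["enrollment"])
    return {continuous_key: average, categorical_key: largest[categorical_key]}


# Hypothetical firm offering a conventional plan alongside a PPO and an HMO.
firm_plans = [
    {"type": "conventional", "enrollment": 50},
    {"type": "PPO", "enrollment": 300, "deductible": 500, "has_copay": True},
    {"type": "HMO", "enrollment": 100, "deductible": 100, "has_copay": False},
]
assigned = values_for_conventional(firm_plans, "deductible", "has_copay")
```

Here the conventional-plan workers receive a deductible of 400 (the enrollment-weighted average of 500 and 100) and the PPO's copay status, because the PPO is the larger of the two other plans.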

The survey newly distinguished between plans that have an aggregate deductible amount in which all family members’ out-of-pocket expenses count toward the deductible and plans that have a separate amount for each family member, typically with a limit on the number of family members required to reach that amount.

In 2006, Kaiser/HRET began asking employers if they had a health plan that was an exclusive provider organization (EPO). We treat EPOs and HMOs together as one plan type and report the information under the banner of “HMO”; if an employer sponsors both an HMO and an EPO, they are asked about the attributes of the plan with the larger enrollment.

Kaiser/HRET made a slight change to one of the industry groups: we removed Wholesale from the group that also included Agriculture, Mining and Construction. The nine industry categories now reported are: Agriculture/Mining/Construction, Manufacturing, Transportation/Communications/Utilities, Wholesale, Retail, Finance, Service, State/Local Government, and Health Care.

Starting in 2006, we made an important change to the way we test the subgroups of data within a year. Estimates for a given subgroup (firms with 25-49 workers, for instance) are tested against all other firm sizes not included in that subgroup (all firm sizes NOT including firms with 25-49 workers in this example). Tests are done similarly for region and industry: Northeast is compared to all firms NOT in the Northeast (an aggregate of firms in the Midwest, South, and West). Estimates compared across plan types (for example, average premiums in PPOs) are tested against the “All Plans” estimate. In some cases, we also test plan-specific estimates against similar estimates for other plan types (for example, single and family premiums for HDHP/SOs against single and family premiums in HMO, PPO, and POS plans). Those are noted specifically in the text. This year, we also changed the type of Chi-square test from the Chi-square test for goodness-of-fit to the Pearson Chi-square test. Therefore, in 2006, the two types of statistical tests performed are the t-test and the Pearson Chi-square test.

For more detail about the 2006 survey, see the Survey Methodology section of that year’s report.

2007

Kaiser/HRET drew its sample from a Survey Sampling Incorporated list (based on an original Dun and Bradstreet list) of the nation’s private and public employers with three or more workers.

In prior years, many variables were imputed following a hotdeck approach, while others followed a distributional approach (where values were randomly determined from the variable’s distribution, assuming a normal distribution). This year, all variables are imputed following a hotdeck approach. This imputation method does not rely on a normal distribution assumption and replaces missing values with observed values from a firm with similar characteristics, in this case, size and industry. Due to the low imputation rate for most variables, the change in methodology is not expected to have a major impact on the results. In some cases, due to small sample size, imputed outliers are excluded. There are a few variables that Kaiser/HRET has decided should not be imputed; these are typically variables where “don’t know” is considered a valid response option (for example, firms’ opinions about effectiveness of various strategies to control health insurance costs).
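
A hotdeck imputation of the kind described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the survey's production code: the function name `hotdeck_impute`, the field names, and the example firms are hypothetical, donors are drawn at random from firms in the same size and industry cell, and a value is left missing when the cell has no donor.

```python
import random


def hotdeck_impute(firms, variable, rng):
    """Replace missing values with an observed value from a similar firm.

    Donor cells are defined by firm size and industry. The input list is
    not modified; a new list of firm records is returned.
    """
    donors = {}
    for firm in firms:
        if firm[variable] is not None:
            cell = (firm["size"], firm["industry"])
            donors.setdefault(cell, []).append(firm[variable])

    completed = []
    for firm in firms:
        record = dict(firm)
        if record[variable] is None:
            pool = donors.get((record["size"], record["industry"]))
            if pool:  # leave the value missing when no donor exists
                record[variable] = rng.choice(pool)
        completed.append(record)
    return completed


# Hypothetical records; firm 3 has donors in its cell, firm 4 does not.
firms = [
    {"id": 1, "size": "3-49", "industry": "Retail", "copay": 20},
    {"id": 2, "size": "3-49", "industry": "Retail", "copay": 30},
    {"id": 3, "size": "3-49", "industry": "Retail", "copay": None},
    {"id": 4, "size": "200+", "industry": "Finance", "copay": None},
]
completed = hotdeck_impute(firms, "copay", random.Random(0))
```

Because every imputed value is copied from an observed response, no assumption about the shape of the variable's distribution is needed.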

The survey now contains a few questions on employee cost sharing that are asked only of firms that indicate in a previous question that they have a certain cost-sharing provision. For example, the copayment amount for prescription drugs is asked only of those that report they have copayments for prescription drugs. Because the composite variables are reflective of only those plans with the provision, separate weights for the relevant variables were created in order to account for the fact that not all covered workers have such provisions.

For more detail about the 2007 survey, see the Survey Methodology section of that year’s report.

2008

National Research, LLC (NR), our Washington, D.C.-based survey research firm, introduced a new CATI (Computer Assisted Telephone Interview) system at the end of 2007, and, due to several delays in the field, obtained fewer responses than expected. As a result, an incentive of $50 was offered during the final two and a half weeks the survey was in the field. Kaiser/HRET compared the distribution of key variables between firms receiving the incentive and firms not receiving the incentive to determine any potential bias. Chi-square test results were not significant, suggesting minimal to no bias.

In 2008, we changed the method used to report the annual percentage premium increase. In prior years, the reported percentage was based on a series of questions that asked responding firms the percentage increase or decrease in premiums from the previous year to the current year for a family of four in the largest plan of each plan type (e.g., HMO, PPO). The reported premium increase was the average of the reported percentage changes (e.g., 6.1% for 2007) weighted by covered workers. This year, we calculate the overall percentage increase in premiums from year to year for family coverage using the average of the premium dollar amounts for a family of four in the largest plan of each plan type reported by respondents and weighted by covered workers (i.e., $12,106 for 2007 and $12,680 for 2008, an increase of 5%). A principal advantage of using the premium dollar amounts to calculate the annual change in premiums is that we are better able to capture changes in the cost of health insurance for those firms that are newly in the market or that change plan types, especially those that move to plans with very different premium levels. For example, in the first year that a firm offers a plan of a new plan type, such as a consumer-directed plan, the firm can report the level of the premium it paid but, under the previous method, would be unable to report the rate of change from the previous year since the plan was not previously offered. If the premium for the new plan is relatively low compared to other premiums in the market, the relatively low premium amount that the firm reports will tend to lower the weighted average premium dollar amount reported in the survey, but the firm’s responses would not provide any information for the percentage premium increase question.
Another advantage of using premium dollar amounts to examine trends is that these data directly relate to the other findings in the survey and better address a principal public policy issue (i.e., what was the change in the cost of insurance over some past period). Many users noted, for example, that the percentage change calculated from the reported premium dollar amounts between two years did not directly match the reported average premium increase for the same period. There are several reasons why we would not expect these questions to produce identical results: 1) they are separate questions subject to varying degrees of reporting error, 2) firms could report a premium dollar amount for a plan type they might not have offered in the previous year, therefore, contributing information to one measure but not the other, or 3) firms could report a premium dollar amount for a plan that was not the largest plan of that type in the previous year. Although the two approaches have generated similar results in terms of the long-term growth rate of overall family premiums, there are greater discrepancies in trends for subgroups like small employers and self-funded firms. Focusing on the dollar amount changes over time will provide a more reliable and consistent measure of premium change that also is more sensitive to firms offering new plan options.
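
The difference between the two approaches can be made concrete with a small Python sketch. The first calculation uses the average family premiums reported above ($12,106 and $12,680); everything else, including the plan-level premiums and covered-worker weights, is hypothetical and chosen only to show how a newly offered, lower-cost plan affects each measure.

```python
def weighted_mean(values, weights):
    """Average of values weighted by covered workers."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def pct_change(previous, current):
    """Percentage change from previous to current."""
    return 100 * (current - previous) / previous


# Reported averages: the unrounded change is about 4.7%, reported as 5%.
reported_change = pct_change(12106, 12680)

# Hypothetical market: plans A and B each rise 5%, and in the second year
# half of plan B's workers move to a new, cheaper plan C.
premiums_year1, workers_year1 = [12000, 12000], [100, 100]
premiums_year2, workers_year2 = [12600, 12600, 9000], [100, 50, 50]

# Previous method: average the reported percentage changes. Plan C has no
# prior-year premium, so it contributes nothing to this measure.
old_method = weighted_mean(
    [pct_change(12000, 12600), pct_change(12000, 12600)], [100, 50]
)

# Current method: compare the weighted average dollar amounts across years.
new_method = pct_change(
    weighted_mean(premiums_year1, workers_year1),
    weighted_mean(premiums_year2, workers_year2),
)
```

In this hypothetical case the previous method reports a 5% increase, while the dollar-based method reports a 2.5% decrease because the average premium paid fell from $12,000 to $11,700 once the new plan is counted.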

As we have in past years, this year we collected information on the cost-sharing provisions for hospital admissions and outpatient surgery that is in addition to any general annual plan deductible. However, for the 2008 survey, we changed the structure of the question and now include “separate annual deductible for hospital admissions” as a response option rather than collecting the information through a separate question. We continue to examine and sometimes modify the questions on hospital and outpatient surgery cost sharing because this can be a complex component of health benefit plans. For example, for some plans it is difficult to distinguish a separate hospital deductible from one categorized as a general annual deductible, where office visits and preventive care are covered and the deductible only applies to hospital use. Because this continues to be a point of confusion, we continue to refine the series of questions in order to clearly convey the information we are attempting to collect from respondents.

As in 2007, we asked firms if they offer health benefits to opposite-sex or same-sex domestic partners. However, this year, we changed the response options because during early tests of the 2008 survey, several firms noted that they had not encountered the issue yet, indicating that the responses of “yes,” “no,” and “don’t know” were insufficient. Therefore, this year we added the response option “not applicable/not encountered” to better capture the number of firms that report not having a policy on the issue.

For more detail about the 2008 survey, see the Survey Methodology section of that year’s report.

2009

In the fall of 2008, with guidance from experts in survey methods and design from NORC, we reviewed the methods used for the survey. As a result of this review, several important modifications were made to the 2009 survey, including the sample design and questionnaire. For the first time, this year we determined the sample requirements based on the universe of firms obtained from the U.S. Census rather than Dun and Bradstreet. Prior to the 2009 survey, the sample requirements were based on the total counts provided by Survey Sampling Incorporated (SSI) (which obtains data from Dun and Bradstreet). Over the years, we have found the Dun and Bradstreet frequency counts to be volatile because of duplicate listings of firms, or firms that are no longer in business. These inaccuracies vary by firm size and industry. In 2003, we began using the more consistent and accurate counts provided by the Census Bureau’s Statistics of U.S. Businesses and the Census of Governments as the basis for post-stratification, although the sample was still drawn from a Dun and Bradstreet list. In order to further address this concern at the time of sampling, we now also use Census data as the basis for the sample. This change resulted in shifts in the sample of firms required in some size and industry categories.

This year, we also defined Education as a separate sampling category, rather than as a subgroup of the Service category. In the past, Education firms were a disproportionately large share of Service firms. Education is controlled for during post-stratification, and adjusting the sampling frame to also control for Education allows for a more accurate representation of both Education and Service industries.

In past years, both private and government firms were sampled from the Dun and Bradstreet database. For the 2009 sample, Government firms were sampled in-house from the 2007 Census of Governments. This change was made to eliminate the overlap of state agencies that were frequently sampled from the Dun and Bradstreet database. Each year the survey attempts to repeat interviews with respondents from past years (see “Response Rate” section below), and in order to maintain government firms that had completed the survey in the past (firms that have completed the survey in the past are known as panel firms), government firms from the 2008 survey were matched to the Census of Governments to identify phone numbers. All panel government firms were included in the sample (resulting in an oversample). In addition, the sample of private firms is screened for firms that are related to state/local governments, and if these firms are identified in the Census of Governments, they are reclassified as government firms and a private firm is randomly drawn to replace the reclassified firm. These changes to the sample frame resulted in an expected slight reduction in the overall response rate, since there were shifts in the number of firms needed by size and industry. Therefore, the data used to determine the 2009 Employer Health Benefits sample frame include the U.S. Census’ 2005 Statistics of U.S. Businesses and the 2007 Census of Governments. At the time of the sample design (December 2008), these data represented the most current information on the number of public and private firms nationwide with three or more workers. As in the past, the post-stratification is based on the most up-to-date Census data available (the 2006 update to the Census of U.S. Businesses was purchased during the survey field period) and the 2007 Census of Governments.
The Census of Governments is conducted every five years, and this is the first year the data from the 2007 Census of Governments have been available for use.

Based on recommendations from cognitive researchers at NORC and internal analysis of the survey instrument, a number of questions were revised to improve the clarity and flow of the survey in order to minimize survey burden. For example, in order to better capture the prevalence of combinations of inpatient and outpatient surgery cost sharing, the survey was changed to ask a series of yes or no questions. Previously, the question asked respondents to select one response from a list of types of cost sharing, such as separate deductibles, copayments, coinsurance, and per diem payments (for hospitalization only). We have also expanded the number of questions for which respondents can provide either the number of workers or the percentage of workers. Previously, after obtaining the total number of employees, the majority of questions asked about the percentage of workers with certain characteristics. Now, for questions such as the percentage of workers making $23,000 a year or less or the enrollment of workers in each plan type, respondents are able to respond with either the number or the percentage of workers. Few of these changes have had any noticeable impact on responses.

For more detail about the 2009 survey, see the Survey Methodology section of that year’s report.

2010

New topics in the 2010 survey include questions on eligibility for dependent coverage, coverage for care received at retail clinics, health plan changes as a result of the Mental Health Parity and Addiction Equity Act of 2008, and disease management. As in past years, this year’s survey included questions on the cost of health insurance, offer rates, coverage, eligibility, enrollment patterns, premiums, employee cost sharing, prescription drug benefits, retiree health benefits, wellness benefits, and employer opinions.

Firms in the sample with 3-49 workers that did not complete the full survey are contacted and asked (or re-asked in the case of firms that previously responded to only one question about offering benefits) whether or not the firm offers health benefits. As part of the process, we conduct a McNemar test to verify that the results of the follow-up survey are comparable to the results from the original survey. If the test indicates that the results are comparable, a nonresponse adjustment is applied to the weights used when calculating firm offer rates. This year, for the first time since we began conducting the follow-up survey, the test indicated that the results from those answering the one question about offering health benefits in the original survey and those answering the follow-up survey were different (statistically significant difference at the p<0.05 level between the two surveys), suggesting the results are not comparable. Therefore, we did not use the results of this follow-up survey to adjust the weights as we have in the past. In the past, the nonresponse adjustment lowered the offer rate for smaller firms by one to three percentage points, so not making the adjustment this year makes the offer rate look somewhat higher when making comparisons to prior years. For 2010, we saw a very large and unexpected increase in the offer rate (from 60 percent in 2009 to 69 percent in 2010) overall and particularly for firms with 3 to 9 workers (from 46 percent in 2009 to 59 percent in 2010). While not making the adjustment this year added to the size of the change, there would have been a large and difficult to explain change even if a nonresponse adjustment comparable to previous years had been made.
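
The decision rule described above can be sketched with a basic McNemar test in Python. This is a simplified illustration, not the survey's actual analysis: it uses the chi-square form of the test without a continuity correction, ignores the survey weights, and the counts of firms whose answers differed between the two surveys are hypothetical.

```python
import math


def mcnemar_test(b, c):
    """McNemar chi-square test on the two discordant-pair counts.

    b: firms answering "yes" in the original survey and "no" in the follow-up
    c: firms answering "no" in the original survey and "yes" in the follow-up
    Returns (statistic, p_value) using a chi-square distribution with 1 df.
    """
    if b + c == 0:
        return 0.0, 1.0  # no discordant pairs: no evidence of a difference
    statistic = (b - c) ** 2 / (b + c)
    # For 1 degree of freedom, P(chi2 > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts, for illustration only.
statistic, p_value = mcnemar_test(b=15, c=5)

# Apply the nonresponse adjustment only when the two surveys are comparable.
apply_adjustment = p_value >= 0.05
```

With these hypothetical counts the difference is significant at the .05 level, so the results would be treated as not comparable and the nonresponse adjustment would be skipped, as happened in 2010.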

For more detail about the 2010 survey, see the Survey Methodology section of that year’s report.

2011

New topics in the 2011 survey include questions on stoploss coverage for self-funded plans, cost sharing for preventive care, plan grandfathering resulting from the Affordable Care Act (ACA), and employer awareness of tax credits authorized under the ACA.

This year, we became aware that the way we have been using the data from the Census Bureau for calibration was incorrect and resulted in an over-count of the actual number of firms in the nation. Specifically, firms operating in more than one industry were counted more than once in computing the total firm count by industry, and firms with establishments in more than one state were counted more than once in computing the total firm count by state (which affects the regional count). Because smaller firms are less likely to operate in more than one industry or state, the miscounts occurred largely for larger firm sizes. The error affects only statistics that are weighted by the number of firms (such as the percent of firms offering health benefits or sponsoring a disease management plan). Statistics that are weighted by the number of workers or covered workers (such as average premiums, contributions, or deductibles) were not affected. We addressed this issue by proportionally distributing the correct national total count of firms within each firm size as provided by the U.S. Census Bureau across industry and state based on the observed distribution of workers. This effectively weights each firm within each category (industry or state) in proportion to its share of workers in that category. The end result is a synthetic count of firms across industry and state that sums to the national totals. Firm-weighted estimates resulting from this change show only small changes from previous estimates, because smaller firms have much more influence on national estimates. For example, the estimate of the percentage of firms offering coverage was reduced by about .05 percentage points in each year (in some years no change is evident due to rounding). Estimates of the percentage of large firms offering retiree benefits were reduced by a somewhat larger amount (about 2 percentage points). Historical estimates used in the 2011 survey release have been updated following this same process.
As noted above, worker-weighted estimates from prior years were not affected by the miscount and remain the same.
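
The proportional allocation used to build the synthetic firm counts can be sketched in Python as follows. The function name `allocate_firm_counts`, the national total, and the worker counts are hypothetical; the sketch shows the calculation for a single firm-size category split across industries, and the same logic would apply to states.

```python
def allocate_firm_counts(national_total, workers_by_category):
    """Split a national firm count across categories by share of workers.

    national_total: correct number of firms in one firm-size category
    workers_by_category: observed workers in each industry (or state)
    The returned counts sum to national_total (up to floating-point rounding).
    """
    total_workers = sum(workers_by_category.values())
    return {
        category: national_total * workers / total_workers
        for category, workers in workers_by_category.items()
    }


# Hypothetical inputs for one firm-size category.
synthetic_counts = allocate_firm_counts(
    5000, {"Retail": 300, "Manufacturing": 500, "Service": 200}
)
```

Because the allocation starts from the national total, a firm operating in several industries can no longer inflate the overall count; it only shifts how that total is divided among categories.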

For more detail about the 2011 survey, see the Survey Methodology section of that year’s report.

2012

New topics in the 2012 survey include the use of biometric screening, domestic partner benefits, and emergency room cost sharing. In addition, many of the questions on health reform included in the 2011 survey were retained, including stoploss coverage for self-funded plans, cost sharing for preventive care, and plan grandfathering resulting from the Affordable Care Act (ACA).

There are several variables for which missing data are calculated based on respondents’ answers to other questions (for example, when missing employer contributions to premiums are calculated from the respondent’s premium and the ratio of contributions to premiums). In 2012 the method to calculate missing premiums and contributions was revised; if a firm provides a premium for single coverage or family coverage, or a worker contribution for single coverage or family coverage, that information was used in the imputation. For example, if a firm provided a worker contribution for family coverage but no premium information, a ratio between the family premium and family contribution was imputed and then the family premium was calculated. In addition, in cases where premiums or contributions for both family and single coverage were missing, the hotdeck procedure was revised to draw all four responses from a single firm. The change in the imputation method did not have a significant impact on the premium or contribution estimates.
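
The ratio-based calculation in the example above can be sketched in Python. This is an illustration under assumptions the text does not spell out: the ratio is taken as premium divided by worker contribution, it is borrowed from a single donor firm, and all dollar amounts and the function name `impute_family_premium` are hypothetical.

```python
def impute_family_premium(worker_contribution, donor_premium, donor_contribution):
    """Estimate a missing family premium from a reported worker contribution.

    The premium-to-contribution ratio comes from a donor firm (an assumed
    source for the imputed ratio) and is applied to the firm's own
    reported contribution.
    """
    ratio = donor_premium / donor_contribution
    return worker_contribution * ratio


# Hypothetical firm reporting a $5,000 family contribution but no premium;
# the donor firm has a $16,000 premium and a $4,000 contribution.
family_premium = impute_family_premium(5000, 16000, 4000)
```

Anchoring the estimate to the firm's own reported contribution keeps the imputed premium consistent with the information the firm did provide.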

In 2012, the method for calculating the size of the sample was adjusted. Rather than using a combined response rate for panel and non-panel firms, separate response rates were used to calculate the number of firms to be selected in each stratum. In addition, the mining stratum was collapsed into the agriculture and construction industry grouping. In sum, to provide more balanced power within each stratum, the changes to the sampling method required more firms to be included and may have reduced the response rate.

To account for design effects, the statistical computing package R and the library package “survey” were used to calculate standard errors. All statistical tests are performed at the .05 level, unless otherwise noted. For figures with multiple years, statistical tests are conducted for each year against the previous year shown, unless otherwise noted. No statistical tests are conducted for years prior to 1999. In 2012 the method to test the difference between distributions across years was changed to use a Wald test which accounts for the complex survey design. In general this method was more conservative than the approach used in prior years.

In 2012, average coinsurance rates for prescription drugs, primary care office visits, specialty office visits, and emergency room visits include firms that have a minimum and/or maximum attached to the rate. In years prior to 2012 we did not ask firms the structure of their coinsurance rate. For most prescription drug tiers, and most services, the average coinsurance rate is not statistically different depending on whether the plan has a minimum or maximum.

In 2012 the calculation of the response rates was adjusted to be slightly more conservative than previous years.

For more detail about the 2012 survey, see the Survey Methodology section of that year’s report.

2013

Starting in 2013, information on conventional plans was collected under the PPO section and therefore the covered worker weight was representative of all plan types.

For more detail about the 2013 survey, see the Survey Methodology section of that year’s report.

2014

Starting in 2014, we elected to estimate separate single and family coverage premiums for firms that provided premium amounts as the average cost for all covered workers, instead of differentiating between single and family coverage. This method more accurately accounted for the portion that each type of coverage contributes to the total cost for the 1 percent of covered workers who are enrolled at firms affected by this adjustment.

Several provisions of the ACA took effect on January 1, 2014, which impacted non-grandfathered plans as well as plans renewing in calendar year 2014, such as the requirement to have an out-of-pocket limit and a waiting period of not more than three months. As a result, firms with non-grandfathered plans that reported that they did not have out-of-pocket limits, or that had waiting periods exceeding three months, were contacted during our data-confirmation calls. We did not have information on the month in which a firm’s plan or plans were renewed. Many of these firms indicated that they had a plan year starting prior to January 2014, so these ACA provisions were not yet in effect for these plans.

Firms with 200 or more workers were asked: “Does your firm offer health benefits for current employees through a private or corporate exchange? A private exchange is one created by a consulting firm or an insurance company, not by either a federal or state government. Private exchanges allow employees to choose from several health benefit options offered on the exchange.” Employers were still asked for plan information about their HMO, PPO, POS and HDHP/SO plan regardless of whether they purchased health benefits through a private exchange or not.

Beginning in 2014, we collected whether firms with a non-final disposition code (such as a firm that requested a callback at a later time or date) offered health benefits. By doing so we attempt to mitigate any potential non-response bias of firms either offering or not offering health benefits on the overall offer rate statistic.

For more detail about the 2014 survey, see the Survey Methodology section of that year’s report.

2015

To increase response rates, firms with 3-9 employees were offered an incentive of $75 in cash or as a donation to a charity of their choice to complete the full survey.

In 2015, weights were not adjusted using the nonresponse adjustment process described in previous years’ methods. As in past years, Kaiser/HRET conducted a small follow-up survey of those firms with 3 to 49 workers that refused to participate in the full survey. Based on the results of a McNemar test, we were not able to verify that the results of the follow-up survey were comparable to the results from the original survey. In 2010, the results of the McNemar test were also significant and we did not conduct a nonresponse adjustment.

The 2015 survey contains new information in several areas, including on wellness and biometric screening. In most cases, information reported in this section is not comparable with previous years’ findings. Data presented in the 2015 report reflect the firm’s benefits at the time they completed the interview. Some firms may report on a plan which took effect in the prior calendar year. Starting in 2015, firms were able to have a contribution and deductible in compliance with HSA requirements for the plan year.

Starting in 2015, employers were asked how many full-time equivalents they employed. In cases in which the number of full-time equivalents was relevant to the question, interviewer skip patterns may have depended on the number of FTEs.

In cases where a firm had multiple plans, they were asked about their strategies for containing the cost of specialty drugs for the plan with the largest enrollment.

Under the Affordable Care Act, non-grandfathered plans are required to have an out-of-pocket maximum. Firms with non-grandfathered plans that indicated that they did not have an out-of-pocket maximum were asked to confirm whether their plan was grandfathered and whether that plan had an out-of-pocket maximum.

For more detail about the 2015 survey, see the Survey Methodology section of that year’s report.

2016

Between 2015 and 2016, we conducted a series of focus groups that led us to conclude that human resource and benefit managers at firms with 20-49 employees think about health insurance premiums more like benefit managers at smaller firms than like those at larger firms. Therefore, starting in 2016, we altered the health insurance premium question pathway for firms with 20-49 employees to match that of firms with 3-19 employees rather than that of firms with 50 or more employees. This change affected firms representing 8% of the total covered worker weight. We believe that these questions produce comparable responses and that this edit does not create a break in trend.

Starting in 2016, we made significant revisions to how the survey asks employers about their prescription drug coverage. In most cases, information reported in the Prescription Drug Benefits section is not comparable with previous years’ findings. First, in addition to the four standard tiers of drugs (generics, preferred, non-preferred, and lifestyle), we began asking firms about cost sharing for a drug tier that covers only specialty drugs. This new tier pathway in the questionnaire affects the trend for the four standard tiers, since respondents to the 2015 survey might have categorized their specialty drug tier as one of the four standard tiers. We did not modify the question about the number of tiers in a firm’s cost-sharing structure, but in cases in which the highest tier covered exclusively specialty drugs we reported it separately. For example, a firm with three tiers may have copays or coinsurances for only two tiers because its third-tier copay or coinsurance is being reported as a specialty tier. Furthermore, in order to reduce survey burden, firms were asked about the plan attributes of only their plan type with the most enrollment. Therefore, in most cases, we no longer make comparisons between plan types. Lastly, prior to 2016, we required firms’ cost-sharing tiers to be sequential, meaning that the second-tier copay was higher than the first, the third was higher than the second, and the fourth was higher than the third. As drug formularies have become more intricate, many firms have minimums and maximums attached to their copays and coinsurances, leading us to believe it was no longer appropriate to assume that a firm’s cost sharing followed this sequential logic.

In cases where a firm had multiple plans, they were asked about their strategies for containing the cost of specialty drugs for the plan type with the largest enrollment. Between 2015 and 2016, we modified the series of ‘Select All That Apply’ questions regarding cost containment strategies for specialty drugs. In 2016, we elected to impute firms’ responses to these questions. We removed the option “Separate cost sharing tier for specialty drugs” and added specialty drugs as their own drug tier questionnaire pathway. We added question options on mail order drugs and prior authorization.

In 2016, we modified our questions about telemedicine to clarify that we were interested in the provision of health care services, and not merely the exchange of information, through telecommunication. We also added dependent and spousal questions to our health risk assessment question pathway.

For more detail about the 2016 survey, see the Survey Methodology section of that year’s report.

2017

While the Kaiser/HRET survey, like other employer and household surveys, has seen a general decrease in response rates over time, the decrease between the 2016 and 2017 response rates is not solely explained by this trend. In order to improve statistical power among sub-groups, including small firms and those with a high share of low-income workers, the size of the sample was expanded from 5,732 in 2016 to 7,895 in 2017. As a result, the 2017 survey includes 204 more completes than the 2016 survey. While this generally increases the precision of estimates (for example, a reduction in the standard error for the offer rate from 2.2% to 1.8%), it has the effect of reducing the response rate. In 2017, non-panel firms had a response rate of 17%, compared to 62% for firms that had participated in one of the last two years.

To increase response rates, firms with 3-9 employees were offered an incentive for participating in the survey. A third of these firms were sent a $5 Starbucks gift card in the advance letter, a third were offered an incentive of $50 in cash or as a donation to a charity of their choice after completing the full survey, and a third of firms were offered no incentive at all. Our analysis does not show significant differences in responses to key variables among these incentive groups.

In 2017, weights were not adjusted using the nonresponse adjustment process described in previous years’ methods. As in past years, Kaiser/HRET conducted a small follow-up survey of those firms with 3-49 workers that refused to participate in the full survey. Based on the results of a McNemar test, we were not able to verify that the results of the follow-up survey were comparable to the results from the original survey. In 2010 and 2015, the results of the McNemar test were also significant and we did not conduct a nonresponse adjustment.

To reduce the length of the survey, in several areas, including stop-loss coverage for self-funded firms and cost sharing for hospital admissions, outpatient surgery, and emergency room visits, we revised the questionnaire to ask respondents about the attributes of their largest health plan rather than each plan type they may offer. This expands on the method we used for prescription drug coverage in 2016. Therefore, for these topics, aggregate variables represent the attributes of the firm’s largest plan type, and are not a weighted average of all of the firm’s plan types. In previous surveys, if a firm had two plan types, one with a copayment and one with a coinsurance for hospital admissions, the covered worker weight was allotted proportionally toward the average copayment and coinsurance based on the number of covered workers with either feature. With this change, a comparison among plan types is now a comparison of firms where any given plan type is the largest. The change only affects firms that have multiple plan types (58% of covered workers). After reviewing the responses and comparing them to prior years where we asked about each plan type, we find that the information we are receiving is similar to responses from previous years. For this reason, we will continue to report our results for these questions weighted by the number of covered workers in responding firms.
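The difference between the earlier proportional allocation and the largest-plan approach can be illustrated with a small sketch. It assumes a single firm whose covered worker weight is split across cost-sharing features by enrollment; the function names, the weight of 1,000, and the enrollment counts are hypothetical and chosen only to make the arithmetic visible.

```python
def allocate_proportionally(
    firm_weight: float, workers_by_feature: dict[str, int]
) -> dict[str, float]:
    """Earlier approach: split the firm's covered worker weight across
    features in proportion to the covered workers with each feature."""
    total = sum(workers_by_feature.values())
    if total == 0:
        raise ValueError("firm has no covered workers")
    return {
        feature: firm_weight * workers / total
        for feature, workers in workers_by_feature.items()
    }


def allocate_to_largest(
    firm_weight: float, workers_by_feature: dict[str, int]
) -> dict[str, float]:
    """Largest-plan approach: the full weight goes to the feature of the
    plan type with the most covered workers."""
    largest = max(workers_by_feature, key=workers_by_feature.get)
    return {largest: firm_weight}


# Hypothetical firm: 600 workers in a plan with a hospital copayment,
# 400 in a plan with hospital coinsurance.
firm = {"copayment": 600, "coinsurance": 400}
print(allocate_proportionally(1000.0, firm))  # {'copayment': 600.0, 'coinsurance': 400.0}
print(allocate_to_largest(1000.0, firm))      # {'copayment': 1000.0}
```

In this illustration the firm's total weight is unchanged; only its distribution across features differs, which is why firms with a single plan type are unaffected.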

Starting in 2017, respondents were allowed to volunteer that their plans did not cover outpatient surgery or hospital admissions. Less than 1% of respondents indicated that their plan did not include coverage for these services. Cost sharing for hospital admissions, outpatient surgery and emergency room visits was imputed by drawing a firm similar in size and industry within the same plan type.
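Drawing a similar firm to fill in a missing value is commonly called hot-deck imputation. A minimal sketch follows, assuming that "similar" means an exact match on plan type, size class, and industry; the field names, category labels, and dollar values are hypothetical, and the survey's actual matching rules may be looser or use different groupings.

```python
import random

# Hypothetical matching cells; the survey's actual definition of
# "similar in size and industry" is not specified here.
MATCH_KEYS = ("plan_type", "size_class", "industry")


def hot_deck_impute(recipient: dict, donors: list[dict], field: str,
                    rng: random.Random):
    """Return a value for `field` drawn at random from donors that share
    the recipient's matching cell, or None if no donor qualifies."""
    pool = [
        d for d in donors
        if d.get(field) is not None
        and all(d[k] == recipient[k] for k in MATCH_KEYS)
    ]
    if not pool:
        return None
    return rng.choice(pool)[field]


# Hypothetical records, for illustration only.
donors = [
    {"plan_type": "PPO", "size_class": "200-999", "industry": "Retail", "er_copay": 150},
    {"plan_type": "HMO", "size_class": "200-999", "industry": "Retail", "er_copay": 100},
]
recipient = {"plan_type": "PPO", "size_class": "200-999", "industry": "Retail", "er_copay": None}

# Only the PPO donor shares the recipient's cell, so its value is used.
print(hot_deck_impute(recipient, donors, "er_copay", random.Random(0)))  # 150
```

Restricting donors to the same plan type keeps imputed cost sharing consistent with the plan design being described.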

For more detail about the 2017 survey, see the Survey Methodology section of that year’s report.

2018

As in past years, we conducted a small follow-up survey of those firms with 3-49 workers that refused to participate in the full survey. Based on the results of a McNemar test, we were not able to verify that the results of the follow-up survey were comparable to the results from the original survey, and weights were not adjusted using the nonresponse adjustment process described in previous years’ methods. In 2010, 2015, and 2017, the results of the McNemar test were also significant and we did not conduct a nonresponse adjustment.

In light of a number of regulatory changes and policy proposals, we included new questions on the anticipated effects of the ACA’s individual mandate penalty repeal on the firm’s health benefits offerings, and the impact of the delay of the high cost plan tax, also known as the Cadillac tax, on the firm’s health benefits decisions. Also new in 2018 are questions asking about smaller firms’ use of level-funded premium plans, an alternative self-funding method with integrated stop loss coverage and a fixed monthly premium.

In 2018, we moved the battery of worker demographics questions from near the beginning of the survey to the end of the survey in an effort to improve the flow. There is no evidence that this move has impacted our survey findings and we will continue to monitor any suspected impacts.

The 2018 survey also expands on retiree health benefits questions, asking firms about cost reduction strategies, whether they contribute to the cost of coverage, and how retiree benefits are offered (e.g., through a Medicare Advantage contract, a traditional employer plan, private exchange, etc.).

Starting in 2018, we allowed respondents who did not know the combined maximum incentive or penalty an employee could receive for health screening and/or wellness and health promotion to answer a categorical question with specified ranges. This method is consistent with how we handle the percent of low-wage and high-wage workers at a firm. In 2018, 18% of respondents did not know the dollar value of their incentive or penalty and 39% were able to estimate a range.

Starting in 2018, the survey began asking small firms that indicated that their plan was fully-insured whether the plan was level-funded. In a level-funded plan, employers make a set payment each month to an insurer or third party administrator which funds a reserve account for claims, administrative costs, and premiums for stop-loss coverage. These plans are often integrated and firms may not understand the complexities of the self-funded mechanisms underlying them. Some small employers that indicate that their plan is self-funded may also offer a plan that meets this definition. Respondents offering level-funded plans were asked about any attachment points applying to enrollees. These firms were not less likely to answer this question, and including them does not substantially change the average. Prior to 2018, all firms reporting coverage as underwritten by an insurer were excluded from the stop-loss calculations.

The response option choices for the type of incentive or penalty for completing biometric screening or a health risk assessment changed from 2017 to 2018.

For more detail about the 2018 survey, see the Survey Methodology section of that year’s report.

2019

Starting in 2019, we discontinued a weighting adjustment informed by a follow-back survey of firms with 3-49 workers that refused to participate in the full survey. This adjustment was intended to reduce non-response bias in the offer rate statistic, under the assumption that firms that did not complete the survey were less likely to offer health benefits. The adjustment involves comparing the distribution of offering to non-offering firms in the full survey and the follow-back sample in the three smallest size categories (3-9, 10-24, 25-49). The adjustment is based on the differences between the two groups of firms and generally operates to adjust the weights of offering firms and non-offering firms to bring the counts closer together. However, if the distributions of the two groups differ to a statistically significant extent, we consider the follow-back survey to be a different population from the full survey and do not make any adjustment to the weights.

Although we cannot be sure of the reason, we are no longer witnessing the systematic upward bias on estimates for the offer rates of small firms that gave rise to the adjustment. Looking at the decade from 2010 to 2019, offer rates among firms responding to the follow-up survey have been higher for five of ten surveys. Firms with 3-49 employees responding to this follow-up survey have reported a higher offer rate than the full EHBS survey during the 2014, 2016, 2017, 2018, and 2019 surveys. An alternative way to measure non-response bias is to compare estimates throughout the fielding period.

In 2019, the percent of firms offering health benefits in the last month of fielding was similar to the offer rate throughout the entire fielding period. Changes in both the survey methodology and the health insurance market have led us to become increasingly cautious about assuming that the follow-back survey is a suitable proxy for the true population. Since 2014, we have collected offer rate information from firms before a final disposition is assigned. This method was introduced to reduce a bias in which firms that offer health benefits face a longer average survey than non-offering firms. This had the effect of increasing the share of contacted firms from which we collected offer rate information. Additionally, we have attempted to reduce non-response bias by increasing our data collection.